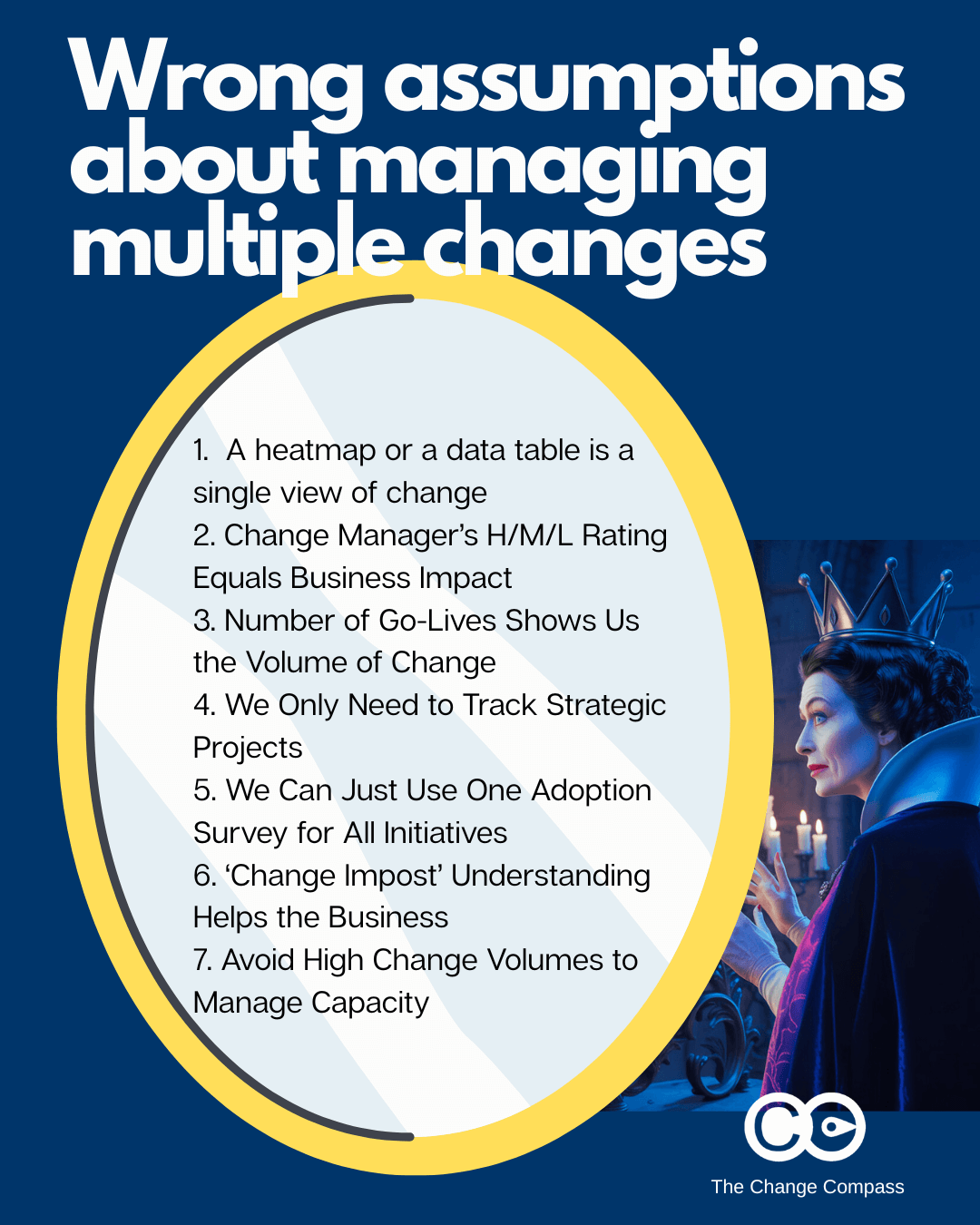

In today’s dynamic business environment, managing multiple changes at once is the norm, not the exception. As change and transformation leaders, we’re expected to provide clarity, reduce disruption, and drive successful adoption, often across a crowded portfolio of initiatives. In this high-stakes context, it’s tempting to lean on familiar tools and assumptions to simplify complexity. However, some of the most common beliefs about managing multiple changes are not just outdated; they can actively undermine your efforts.

Here we explore seven widespread assumptions that can lead change leaders astray. By challenging these myths, you can adopt more nuanced, effective approaches that truly support your people and your business.

Assumption 1: A Heatmap or Data Table is a Single View of Change

Heatmaps and data tables have become go-to tools for visualising change across an organisation. At a glance, they promise to show us where the “hotspots” are—those areas experiencing the most change. But is this single view really giving us the full picture?

Why This Assumption is Wrong

1. Not All Change is Disruptive—Some is Positive

A heatmap typically highlights areas with high volumes of change, but it doesn’t distinguish between positive and negative impacts. For example, a new digital tool might be seen as a “hotspot” simply because it affects many employees, but if it makes their jobs easier and boosts productivity, the overall experience could be positive. Conversely, a smaller change that disrupts workflows or adds complexity may have a much larger negative impact on a specific group, even if it doesn’t light up the heatmap. Depth of understanding beyond the heatmap is key.

2. The Data May Not Show the Real ‘Heat’

The accuracy of a heatmap depends entirely on the data feeding it. If your ratings are based on high-level, generic ‘traffic-light’ impact assessments, you may miss the nuances of how change is actually experienced by employees. For instance, a heatmap might show a “red zone” in one department based on the number of initiatives, but if those initiatives are well-aligned and support the team’s goals, the actual disruption could be minimal.

3. The Illusion of Completeness

A single view of change suggests that you’ve captured every initiative (strategic, operational, and BAU, or Business As Usual) in one neat package. In reality, most organisations struggle to maintain a comprehensive and up-to-date inventory of all changes. BAU initiatives, in particular, often slip under the radar, even though their cumulative impact can be significant. This is not to say you always need to aim for 100% coverage. But if your inventory is incomplete, calling it a ‘single view of change’ is an exaggeration.

The Takeaway

Heatmaps and data tables are useful starting points, but they’re not the whole story. They provide a high-level snapshot, not a diagnosis. Nor should heatmaps be the only visual you use; there are many other ways to present the same data. To truly understand the impact of multiple changes, you need to go deeper: gather qualitative insights, focus on employee experience, and recognise that not all “hotspots” are created equal. Ultimately, the data should tell you why a hotspot exists and how to address it.

Assumption 2: A Change Manager’s H/M/L Rating Equals Business Impact

It’s common practice to summarise the impact of change initiatives using simple High/Medium/Low (H/M/L) ratings. These ratings are easy to communicate and look great in dashboards. But do they really reflect the business impact?

Why This Assumption is Wrong

1. Oversimplification Masks Nuance

H/M/L ratings often blend a variety of factors: the effort required from business leads, subject matter experts (SMEs), sponsors, project teams, and change champions. These ratings may not be based solely—or even primarily—on employee or customer impact. For example, a “High” impact rating might reflect the complexity of project delivery rather than the degree of disruption felt by frontline staff.

2. Limited Decision-Making Value

A single, combined rating has limited utility for decision-making. If you need to focus specifically on employee impacts, customer experience, or partner relationships, a broad H/M/L assessment won’t help you target your interventions. It becomes a blunt instrument, unable to guide nuanced action.

3. Lack of Granularity for Business Units

For business units, three categories (High, Medium, Low) are often too broad to provide meaningful insights. Important differences between types of change, levels of disruption, and readiness for adoption can be lost, resulting in a lack of actionable information.

The Takeaway

Don’t rely solely on H/M/L ratings to understand business impact. Instead, tailor your assessments to the audience and the decision at hand. Where it makes sense, use more granular, context-specific measures that reflect the true nature of the change and its impact on different stakeholder groups.

Assumption 3: Number of Go-Lives Shows Us the Volume of Change

It’s easy to fall into the trap of using Go-Live dates as a proxy for change volume. After all, Go-Live is a clear, measurable milestone, and counting them up seems like a straightforward way to gauge how much change is happening. But this approach is fundamentally flawed.

Why This Assumption is Wrong

1. Not All Go-Lives Are Created Equal

Some Go-Lives are highly technical, involving backend system upgrades or infrastructure changes that have little to no visible impact on most employees. Others, even if small in scope, might significantly alter how people work day-to-day. Simply tallying Go-Lives ignores the nature, scale, and felt impact of each change.

2. The Employee Experience Is Not Tied to Go-Live Timing

The work required to prepare for and adopt a change often happens well before or after the official Go-Live date. In some projects, readiness activities such as training, communications, and process redesign may occur months or even a year ahead of Go-Live. Conversely, true adoption and behaviour change may not take hold until long after the system or process is live. Focusing solely on Go-Live dates misses these critical phases of the change journey.

3. Volume Does Not Equal Impact

A month with multiple Go-Lives might be relatively easy for employees if the changes are minor or well-supported. In contrast, a single, complex Go-Live could create a massive disruption. The volume of Go-Lives is a poor indicator of the real workload and adaptation required from your people.

The Takeaway

Don’t equate the number of Go-Lives with the volume or impact of change. Instead, map the full journey of each initiative—readiness, Go-Live, and post-implementation adoption. Focus on the employee experience throughout the lifecycle, not just at the technical milestone.

Assumption 4: We Only Need to Track Strategic Projects

Strategic projects are naturally top of mind for senior leaders and transformation teams. They’re high-profile, resource-intensive, and often linked to key business objectives. But is tracking only these initiatives enough?

Why This Assumption is Wrong

1. Strategic Does Not Always Mean Disruptive

While strategic projects are important, they don’t always have the biggest impact on employees’ day-to-day work. Sometimes operational or BAU initiatives, such as process tweaks, compliance updates, or system enhancements, can create more disruption for specific teams.

2. Blind Spots in Change Impact

Focusing exclusively on strategic projects creates blind spots. Employees may be grappling with a host of smaller, less visible changes that collectively have a significant impact on morale, productivity, and engagement. If these changes aren’t tracked, leaders may be caught off guard by resistance or fatigue.

3. Data Collection Bias

Strategic projects are usually easier to track because they have formal governance, reporting structures, and visibility. BAU initiatives, on the other hand, are often managed locally and may not be captured in central change registers. Ignoring them can lead to an incomplete and misleading picture of overall change impact.

The Takeaway

To truly understand and manage the cumulative impact of change, track both strategic and BAU initiatives. This broader view helps you identify where support is needed most and prevents change overload in pockets of the organisation that might otherwise go unnoticed.

Assumption 5: We Can Just Use One Adoption Survey for All Initiatives

Surveys are a popular tool for measuring change adoption. The idea of using a single, standardised survey across all initiatives is appealing—it saves time, simplifies reporting, and allows for easy comparison. But this approach rarely delivers meaningful insights.

Why This Assumption is Wrong

1. Every Initiative Is Unique

Each change initiative has its own objectives, adoption targets, and success metrics. A generic survey cannot capture the specific behaviours, attitudes, or outcomes that matter for each project. If you try to make one survey fit all, you end up with questions so broad that the data becomes meaningless and unhelpful.

2. Timing Matters

The right moment to measure adoption varies by initiative. Some changes require immediate feedback post-Go-Live, while others need follow-up months later to assess true behavioural change. Relying on a single survey at a fixed time can miss critical insights about the adoption curve.

3. Depth and Relevance Are Lost

A one-size-fits-all survey lacks the depth needed to diagnose issues, reinforce learning, or support targeted interventions. It may also fail to engage employees, who can quickly spot when questions are irrelevant to their experience.

The Takeaway

Customise your adoption measurement for each initiative. Tailor questions to the specific outcomes you want to achieve, and time your surveys to capture meaningful feedback. Consider multiple touchpoints to track adoption over time and reinforce desired behaviours.

Assumption 6: Understanding ‘Change Impost’ Helps the Business

The term “change impost” has crept into the vocabulary of many organisations, often used to describe the perceived burden that change initiatives place on the business. On the surface, it might seem helpful to quantify this “impost” so that leaders can manage or minimise it. However, this framing is fraught with problems.

Why This Assumption is Wrong

1. Negative Framing Fuels Resistance

Describing change as an “impost” positions it as something external, unwelcome, and separate from “real” business work. This language reinforces the idea that change is a distraction or a burden, rather than a necessary part of growth and improvement. When stakeholders hear change discussed in these terms, it can entrench negative attitudes towards change instead of encouraging them to treat change as part of normal business work.

2. It Artificially Separates ‘Change’ from ‘Business’

In reality, change is not an add-on—it is intrinsic to business evolution. By treating change as something apart from normal operations, organisations create a false dichotomy that hinders integration and adoption. This separation can also lead to confusion about responsibilities and priorities, making it harder for teams to see the value in new ways of working.

3. There Are Better Alternatives

Instead of “change impost,” consider using terms like “implementation activities,” “engagement activities,” or “business transformation efforts.” These phrases acknowledge the work involved in change but frame it positively, as part of the ongoing journey of business improvement.

The Takeaway

Language matters. Choose terminology that normalises change as part of everyday business, not as an external burden. This shift in mindset can help foster a culture where change is embraced, not endured.

Assumption 7: We Just Need to Avoid High Change Volumes to Manage Capacity

It’s a common belief that the best way to manage organisational capacity is to avoid periods of high change volume—flattening the curve, so to speak. While this sounds logical, the reality is more nuanced.

Why This Assumption is Wrong

1. Sometimes High Volume Is Strategic

Depending on your organisation’s transformation goals, there may be times when a surge in change activity is necessary. For example, reaching a critical mass of changes within a short period can create momentum, signal a new direction, or help the organisation pivot quickly. In these cases, a temporary increase in change volume is not only acceptable but desirable, because it helps build momentum and deliver significant outcomes.

2. Not All Change Is Equal

The type of change matters as much as the quantity. Some changes are minor and easily absorbed, while others are complex and disruptive. Simply counting the number of initiatives or activities does not account for their true impact on capacity.

3. Planned Peaks and ‘Breathers’ Are Essential

Rather than striving for a perfectly flat change curve, it’s often more effective to plan for peaks and valleys. After a period of intense change, deliberately building in “breathers” allows the organisation to recover, consolidate gains, and prepare for the next wave. This approach helps maintain organisational energy and reduces the risk of burnout.

The Takeaway

Managing capacity is about more than just avoiding high volumes of change. It requires a strategic approach to pacing, sequencing, and supporting people through both busy and quieter periods.

Practical Recommendations for Change Leaders

Having debunked these common assumptions, what should change management and transformation leaders do instead? Here are some actionable strategies:

1. Use Multiple Lenses to Assess Change

- Combine quantitative tools (like heatmaps and data tables) with qualitative insights from employee feedback, focus groups, and direct observation.

- Distinguish between positive and negative impacts, and tailor your analysis to specific stakeholder groups.

2. Get Granular with Impact Assessments

- Move beyond generic H/M/L ratings. Develop more nuanced scales or categories that reflect the true nature and distribution of impacts.

- Segment your analysis by business unit, role, or customer group to uncover hidden hotspots.

3. Map the Full Change Journey

- Track readiness activities, Go-Live events, and post-implementation adoption separately.

- Recognise that the most significant work—both for employees and leaders—often happens outside the Go-Live window.

4. Track All Relevant Initiatives

- Include both strategic and BAU changes in your change portfolio.

- Regularly update your inventory to reflect new, ongoing, and completed initiatives.

5. Customise Adoption Measurement

- Design adoption surveys and feedback mechanisms for each initiative, aligned to its specific objectives and timing.

- Use multiple touchpoints to monitor progress and reinforce desired behaviours.

6. Use Positive, Inclusive Business Language

- Frame change as part of business evolution and operations, not an “impost.”

- Encourage leaders and teams to see change work as integral to ongoing success.

7. Plan for Peaks and Recovery

- Strategically sequence changes to align with business priorities and capacity.

- Build in recovery periods after major waves of change to maintain energy and engagement.

Managing multiple changes in a complex organisation is never easy—but it’s made harder by clinging to outdated assumptions. By challenging these myths and adopting a more nuanced, evidence-based approach, change management and transformation leaders can better support their people, deliver real value, and drive sustainable success.

Remember: Effective change management is not about ticking boxes or flattening curves. It’s about understanding the lived experience of change, making informed decisions, and leading with empathy and clarity in a world that never stands still.

At The Change Compass, we’ve incorporated various best practices into our tool to capture change data across the organisation. Chat to us to find out more.